Artificial Intelligence-Enhanced Neurocritical Care for Traumatic Brain Injury : Past, Present and Future


Abstract

In neurointensive care units (NICUs), particularly in cases involving traumatic brain injury (TBI), swift and accurate decision-making is critical because of rapidly changing patient conditions and the risk of secondary brain injury. The use of artificial intelligence (AI) in the NICU can enhance clinical decision support and provide valuable assistance in these complex scenarios. This article provides a comprehensive review of the current status and future prospects of AI in the NICU, along with the challenges that must be overcome to realize this potential. At present, the primary application of AI in the NICU is outcome prediction through the analysis of preadmission data and high-resolution data collected during admission. More recent applications include augmented neuromonitoring via signal quality control and real-time event prediction. In addition, AI can integrate data gathered from various measures and support minimally invasive neuromonitoring to increase patient safety. However, despite the recent surge in AI adoption within the NICU, most AI applications have been limited to simple classification tasks, leaving the true potential of AI largely untapped. Emerging AI technologies, such as generalist medical AI and digital twins, hold great potential for advancing neurocritical care through broader AI applications. If challenges such as acquiring high-quality data and addressing ethical issues are overcome, these new AI technologies can be used clinically in the actual NICU environment. We emphasize the need for continuous research and development to maximize the potential of AI in the NICU, and we anticipate that such efforts will further improve the efficiency and accuracy of TBI treatment in the NICU.

INTRODUCTION

Traumatic brain injury (TBI) is one of the leading causes of death and disability worldwide, with an estimated global incidence of 27–69 million cases annually [8]. TBI can result in a range of progressive, long-term physical and psychosocial impairments, including deficits in cognitive abilities such as attention and memory, neurological symptoms such as headaches and dizziness, neuropsychiatric disorders, and an increased likelihood of neurodegenerative disease later in life [120].

Brain injury after TBI can be classified into two types : primary and secondary. Primary brain injury refers to the immediate consequences of the initial trauma and is therefore irreversible. Secondary brain injury refers to a series of events triggered by physiological responses to the initial damage, which may cause additional brain injury and worsen patient prognosis [81]. Therefore, the primary objective of TBI management in neurointensive care units (NICUs) is to prevent, evaluate, and treat these secondary insults. This involves measures such as maintaining sufficient oxygenation and blood pressure, controlling intracranial pressure (ICP) and cerebral perfusion pressure (CPP), and providing appropriate nutritional support [57,121].

Despite major advances in our understanding of TBI, numerous challenges persist in managing this complex and heterogeneous condition. One of the primary challenges arises from the heterogeneity of injury mechanisms, patient characteristics, and injury severity, which creates a pressing need for patient-specific treatments [10,74]. Determining the most suitable treatment strategy requires taking into account the severity of TBI, the specific brain regions affected, and each patient’s physiological response. Artificial intelligence (AI) has the potential to play a critical role in meeting these needs by providing tools for personalized treatment planning and decision support [97]. In TBI management in particular, numerous studies are exploring the diverse applications of medical AI, which can perform tasks such as classification, prediction, and segmentation in patients with TBI, thereby supporting more precise diagnoses and more efficient treatment. Table 1 lists some of these studies, summarizing how AI can contribute during initial management, NICU management, and neuroprognostication for patients with TBI and thus providing insight into the clinical applicability of AI.

AI-enhanced neurocritical care has the potential to significantly improve treatment outcomes and quality of life for patients with TBI. Although AI techniques have rapidly gained acceptance within the neurosurgical community [87,114], the use of AI for TBI management has not yet reached the same level of acceptance. This brief review introduces the core concepts of machine learning (ML) and provides an overview of ML applications in NICUs, with a focus on patients with TBI. It further explores the use of real-time AI in the NICU environment and concludes by investigating the prospective roles of generalist medical AI (GMAI) and digital twins (DT) in the NICU.

ML FOR NEUROCRITICAL CARE DATA ANALYSIS

Core concepts of ML

ML is a subfield of AI that enables computers to identify patterns and rules inherent in data without being explicitly programmed [87]. A key characteristic of ML models is their ability to improve their performance through experience. Although more recent ML techniques, such as reinforcement learning and generative learning, have yielded promising results in certain areas (e.g., surgical planning [26] and neuroimage generation [29]), the conventional tasks of medical AI have primarily relied on two main approaches : unsupervised learning for identifying undefined patterns or reducing data dimensionality, and supervised learning for classification and regression tasks [111]. Although both approaches can be used together, the primary application of ML in medicine has centered on supervised classification and regression for image classification and segmentation, diagnosis, and prediction of outcomes and complications [114].

Although most of these ‘conventional’ tasks could theoretically be performed with statistical methods, such statistical models rely on specific assumptions about data distribution that may not hold in many situations [135]. In particular, if the data are high-dimensional (i.e., have many variables) and/or the relationships between variables are nonlinear, these statistical assumptions are likely to fail. Because nonlinearity and high dimensionality are common in medical data, ML is particularly advantageous in this setting. Logistic regression (LR), naïve Bayes, decision trees, K-nearest neighbors, random forest (RF), and support vector machine (SVM) are commonly used classification algorithms in the medical field [6,11,33,123]. The use of ML in medicine has been increasing recently, not only in clinical practice but also in personalized treatment [71,110], a trend further accelerated by the emergence of various decision tree variants and deep learning technologies [9,45,64,69,113,142].
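To make the supervised classification setting concrete, the following minimal sketch trains a logistic regression classifier, one of the algorithms listed above, by batch gradient descent on the mean log-loss. It uses only the Python standard library; the two-feature toy dataset, the learning rate, and the epoch count are hypothetical choices for illustration and are not taken from any cited study.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # the gradient of the log-loss w.r.t. the linear score is (p - y)
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Predicted probability of the positive class for one feature vector."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical toy data: two standardized features, binary outcome.
X = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.3], [1.0, 0.9], [0.9, 1.1], [1.2, 0.8]]
y = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic_regression(X, y)
print(predict_proba(w, b, [1.0, 1.0]), predict_proba(w, b, [0.0, 0.0]))
```

In practice, a library implementation (e.g., scikit-learn’s `LogisticRegression`) with held-out validation would be used; the point here is only that the model learns its weights from labeled examples rather than from hand-written rules.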

Role of ML in pre-admission data analysis

For patients with TBI, it is crucial to identify risk factors and establish an early diagnosis during the prehospital phase [34]. It is also essential to optimize triage by classifying patients appropriately according to the severity of brain injury for treatment or transportation. In this regard, ML may enable rapid and accurate diagnosis and treatment planning for patients with TBI.

Early recognition of TBI is particularly important because it improves patient outcomes [35,134,137]. Choi et al. [19] used prehospital information from emergency medical service (EMS) providers, such as the EMS ambulance run sheet and the EMS trauma in-depth registry, to identify risk factors. Applying various ML techniques, among which the elastic net algorithm performed best, they identified loss of consciousness, Glasgow coma scale (GCS) score, and light reflex as the three most significant predictors of TBI-related outcomes. Hale et al. [41] employed an artificial neural network (ANN) using computed tomography (CT) data to predict the likelihood of clinically relevant TBI in pediatric patients aged <18 years who had experienced non-penetrating head trauma.
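For readers unfamiliar with the elastic net, the objective below shows what the method optimizes. It is written, as an assumption, for a binary outcome modeled by logistic regression with intercept β₀, coefficient vector β, observations (xᵢ, yᵢ), overall penalty strength λ ≥ 0, and mixing parameter α between 0 and 1. This is the common glmnet-style parameterization; the exact formulation used by Choi et al. [19] is not stated here.

```latex
\min_{\beta_0,\,\boldsymbol{\beta}}\;
-\frac{1}{n}\sum_{i=1}^{n}\Big[\,y_i\log p_i + (1-y_i)\log(1-p_i)\Big]
+ \lambda\Big(\alpha\,\lVert\boldsymbol{\beta}\rVert_1 + \frac{1-\alpha}{2}\,\lVert\boldsymbol{\beta}\rVert_2^2\Big),
\qquad
p_i = \frac{1}{1 + e^{-(\beta_0 + \mathbf{x}_i^{\top}\boldsymbol{\beta})}}
```

Setting α = 1 gives the lasso and α = 0 gives ridge regression. The ℓ1 term can shrink some coefficients exactly to zero, which is one way an elastic net model can narrow many candidate variables down to a few influential predictors, while the ℓ2 term stabilizes the fit when predictors are correlated.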

Accurately classifying patients with TBI according to trauma severity before or immediately after transfer to the emergency room is crucial for efficient early treatment and has a significant impact on prognosis [1]. Abe et al. [1] aimed to detect traumatic intracranial hemorrhage (tICH) and to differentiate patients with tICH from those without it using information collected by on-site EMS personnel, such as systolic blood pressure, heart rate, body temperature, and respiratory rate. In the testing set, extreme gradient boosting (XGBoost), a gradient-boosted decision tree method, showed the best predictive performance among the ML algorithms tested, with an area under the receiver operating characteristic curve (AUC) of 0.80. Moyer et al. [85] developed a predictive model to identify patients requiring emergency neurosurgery among those diagnosed with moderate-to-severe TBI during the prehospital assessment. Fifteen prehospital predictors were used, including the GCS, initial systolic blood pressure, initial diastolic blood pressure, and initial oxygen saturation. The CatBoost model, another gradient-boosted decision tree method, outperformed the other models with an AUC of 0.81.
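Because the AUC is the headline metric for most studies reviewed here, a short sketch of how it can be computed may help. The AUC equals the probability that a randomly chosen positive case receives a higher predicted score than a randomly chosen negative case (the Mann–Whitney interpretation), with ties counted as one half. The scores and labels in the usage lines are hypothetical.

```python
def auc_mann_whitney(scores, labels):
    """AUC = P(score of a random positive > score of a random negative); ties count 0.5."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical predicted risks and true labels (1 = tICH present).
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]
print(auc_mann_whitney(scores, labels))  # 8 of 9 positive-negative pairs ranked correctly
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation. The pairwise loop is O(n_pos × n_neg), so a rank-based formula or a library routine (e.g., scikit-learn’s `roc_auc_score`) is preferable for large cohorts.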

Optimizing surgical timing with ML

Once patients with TBI are admitted to the hospital, it is important to quickly identify those who require surgical intervention and to intervene at the right time. ML can contribute to this process by helping medical professionals make better decisions. A number of studies have classified patient severity and identified patients who need surgical intervention. For instance, Güler et al. [39] developed a classification model to rapidly evaluate the severity of TBI in patients admitted to the emergency department. An ANN model classified TBI severity using trauma scores, GCS, and electroencephalography (EEG) data, and its classifications showed high agreement with neurologists’ decisions, with a concordance rate of 87%. Habibzadeh et al. [40] focused on patients with moderate TBI and positive initial cranial CT findings, developing a model to differentiate patients who needed neurosurgical intervention from those who did not, based on age, gender, GCS, Marshall score, presence of hematoma, and midline shift score. The SVM model performed best, with an F1 score of 0.83 and an AUC of 0.93. Currently, patients with TBI undergo follow-up CT scans after the initial scan to assess the progression of intracranial damage, raising concerns about unnecessary radiation exposure. In this context, such models show potential as decision-making tools for predicting intracranial damage and identifying patients in need of medical intervention without follow-up CT scans.
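The two agreement measures quoted in this paragraph can be made concrete with a small sketch. The F1 score is the harmonic mean of precision and recall for the positive class (here, hypothetically, “needs neurosurgical intervention”), and a concordance rate is simply the fraction of cases on which the model and the reference decision agree. The labels below are invented for illustration.

```python
def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def concordance_rate(decisions_a, decisions_b):
    """Fraction of cases on which two sets of decisions agree."""
    matches = sum(1 for a, b in zip(decisions_a, decisions_b) if a == b)
    return matches / len(decisions_a)


# Hypothetical labels: 1 = needs neurosurgical intervention.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(f1_score(y_true, y_pred))          # TP=3, FP=1, FN=1, so precision = recall = 0.75
print(concordance_rate(y_true, y_pred))  # 8 of 10 cases agree
```

Unlike the AUC, both metrics depend on the decision threshold used to turn model scores into labels, and the F1 score ignores true negatives, which is why studies often report it alongside the AUC.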

ML is not limited to patient classification; it can also help determine the appropriate timing of medical intervention for each patient’s situation [47,56,77,124]. Some studies examine the general consequences of delayed neurosurgical intervention regardless of surgery type or patient condition, whereas others focus on the appropriate timing of intervention for specific surgical procedures and situations. Hanko et al. [42] predicted mortality and functional outcomes in patients with TBI who underwent primary decompressive craniectomy (DC), considering a variety of variables including medical records, clinical symptoms, and pre- and postoperative conditions. Their RF-based predictive model achieved an AUC of 0.811 for 6-month mortality and 0.873 for functional outcome, with surgical timing being an important variable. The authors concluded that early primary DC is essential for effectively relieving elevated ICP and addressing ongoing brain hemorrhage. Similarly, Seelig et al. [109] examined various variables affecting the outcomes of comatose patients with traumatic acute subdural hematoma. They found that surgical delay was a significant factor : patients who underwent surgery within 4 hours of injury had a mortality rate of 30%, whereas the mortality rate rose to 90% in those who underwent surgery after 4 hours.

Outcome prediction via ML

Seemingly moderate or minor cases of TBI can rapidly escalate to severe TBI, necessitating close surveillance [119]. The variables and parameters generated during such surveillance offer valuable prognostic insights. Indeed, outcome prediction after TBI has long been a major topic in neurocritical care [72], and the increasing availability of ML techniques has stimulated their active incorporation into this field. Tu et al. [128] developed a mortality risk prediction model using the Taiwan triage and acuity scale and 11 variables, including age, sex, and obesity; the LR algorithm exhibited the best predictive performance, with an AUC of 0.925. Tunthanathip and Oearsakul [129] applied ML to predict functional outcomes in pediatric patients with TBI and identified GCS, hypotension, pupillary light reflex, and subarachnoid hemorrhage as important predictors. Among the classification algorithms, SVM showed the highest performance, with an accuracy of 0.94. Finally, Farzaneh et al. [32] proposed an interpretable ML-based framework for predicting long-term functional outcomes in patients with TBI. This study implemented an XGBoost classifier using 18 electronic health record (EHR) variables, and the final model achieved an accuracy of 0.7488 on the test set. A notable advantage of this study is that it provides SHAP (SHapley Additive exPlanations) feature contributions, making the model easier to interpret in medical decision-making.
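SHAP attributes a single prediction to the input features using Shapley values from cooperative game theory. The sketch below computes exact Shapley values by enumerating every feature coalition, using an interventional value function in which features outside a coalition are set to a reference (baseline) value. This is a simplification: SHAP libraries typically average over a background dataset and use faster model-specific algorithms (such as TreeSHAP for tree ensembles like XGBoost), and the three-feature `toy_risk_model` here is hypothetical. Exhaustive enumeration grows exponentially with the number of features, so it is only practical for very small models.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for j in range(n):
        others = [i for i in range(n) if i != j]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # marginal contribution of feature j when added to coalition s
                phi[j] += weight * (value(s | {j}) - value(s))
    return phi


def toy_risk_model(z):
    """Hypothetical risk score with two main effects and one interaction."""
    return 2.0 * z[0] - 1.0 * z[1] + 0.5 * z[0] * z[2]


patient = [1.0, 2.0, 2.0]
reference = [0.0, 0.0, 0.0]
phi = shapley_values(toy_risk_model, patient, reference)
print(phi)  # approximately [2.5, -2.0, 0.5]
```

The values satisfy the efficiency property: they sum to the difference between the model’s output for the patient and its output at the baseline, which is what lets each value be read as that feature’s share of the prediction. Note how the interaction term is split equally between the two features involved.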

ML for high-resolution data analysis during intensive care

Management of severe TBI often requires continuous monitoring of various signals such as ICP, arterial pressure, and electrocardiography (ECG). The sheer scale, nonlinearity, and high dimensionality of the resulting data make ML well suited to their analysis, and ML has shown considerable potential for analyzing continuous neuromonitoring data from patients with TBI to predict outcomes [116,119,125].

First, EEG is actively used to predict outcomes in patients with TBI [7,82]. For example, Haveman et al. [43] used quantitative EEG (qEEG) to predict the outcomes of patients with moderate-to-severe TBI 12 months after injury. They built predictive models using an RF classifier based on qEEG features, age, and mean arterial blood pressure (MAP) collected during the first 7 days after admission. The model showed an AUC of 0.94 on the training set and 0.81 on the validation set. Similarly, Noor et al. [88] used the absolute power spectral density (PSD) in each EEG frequency band to predict the outcomes of patients with moderate TBI 12 months after injury, using random under-sampling boosted trees. The absolute PSD in the delta and gamma bands were the best outcome predictors, with AUCs of 0.97 and 0.95, respectively.
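As a rough illustration of the kind of feature used by Noor et al. [88], the sketch below estimates a one-sided periodogram with a direct discrete Fourier transform and integrates it over a frequency band to obtain absolute band power. The band edges (delta 1–4 Hz, gamma 30–45 Hz), the sampling rate, and the synthetic test signal are assumptions for illustration; real qEEG pipelines usually apply Welch’s method to artifact-free epochs (e.g., `scipy.signal.welch`) rather than a raw O(n²) DFT.

```python
import math


def periodogram(signal, fs):
    """One-sided periodogram (power per Hz) via a direct DFT; O(n^2), fine for short epochs."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    freqs, psd = [], []
    for k in range(n // 2 + 1):
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        p = (re * re + im * im) / (fs * n)
        if 0 < k < n / 2:
            p *= 2  # fold the negative-frequency half into the one-sided spectrum
        freqs.append(k * fs / n)
        psd.append(p)
    return freqs, psd


def band_power(freqs, psd, lo, hi):
    """Absolute band power: integrate the PSD over [lo, hi) Hz with the rectangle rule."""
    df = freqs[1] - freqs[0]
    return sum(p for f, p in zip(freqs, psd) if lo <= f < hi) * df


# Synthetic 2-s "EEG" epoch at 128 Hz: a 2 Hz (delta) sine plus a weaker 40 Hz (gamma) sine.
fs = 128
times = [i / fs for i in range(2 * fs)]
eeg = [math.sin(2 * math.pi * 2 * ti) + 0.2 * math.sin(2 * math.pi * 40 * ti) for ti in times]
freqs, psd = periodogram(eeg, fs)
delta = band_power(freqs, psd, 1.0, 4.0)
gamma = band_power(freqs, psd, 30.0, 45.0)
print(round(delta, 4), round(gamma, 4))
```

Because the 2 Hz and 40 Hz components fall exactly on DFT bins, each band power equals the corresponding sine’s variance (amplitude²/2), a convenient sanity check from Parseval’s theorem.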

In addition, the ICP signal is a valuable source of prognostic information. Raj et al. [96] developed a dynamic mortality prediction model for patients with TBI in the NICU based on ICP and MAP. Dynamic features related to ICP, MAP, CPP, motor response, and eye response were generated and used in an LR model to predict mortality at 30 days post-injury. The algorithm achieved a maximum AUC of 0.84, outperforming IMPACT-TBI [118], one of the most widely accepted static prediction models, which had an AUC of 0.78. Pimentel et al. [93] also attempted to predict mortality in patients with TBI by extracting dynamic features from ICP and MAP signals, using a Gaussian process (GP) framework. This method has the advantage of performing well under intermittently disrupted monitoring conditions, which are common in the NICU. Combining GP-based features with pressure reactivity index (PRx) features significantly improved outcome prediction, yielding an AUC of 0.76.
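Two of the derived quantities mentioned here are simple to compute. CPP is MAP minus ICP, and PRx is commonly defined as a moving Pearson correlation coefficient between consecutive averaged ICP and MAP values (typically 30 consecutive 10-second averages, i.e., about a 5-minute window). The sketch below assumes its inputs are already 10-second averages; the window length and the synthetic traces are illustrative assumptions rather than the exact settings used in the cited studies.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient; NaN if either series is constant."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return float("nan")
    return cov / math.sqrt(va * vb)


def prx_series(icp, mean_ap, window=30):
    """Moving ICP-MAP correlation; inputs assumed to be 10-s averages, so window=30 spans ~5 min."""
    return [pearson(icp[end - window:end], mean_ap[end - window:end])
            for end in range(window, len(icp) + 1)]


def cpp(icp, mean_ap):
    """Cerebral perfusion pressure = MAP - ICP, sample by sample."""
    return [m - i for i, m in zip(icp, mean_ap)]


# Synthetic 10-s averages: MAP fluctuates between 80 and 86 mmHg.
mean_ap = [80.0 + (k % 7) for k in range(60)]
icp_passive = [0.2 * m - 2.0 for m in mean_ap]    # ICP passively follows MAP
icp_reactive = [30.0 - 0.2 * m for m in mean_ap]  # ICP moves opposite to MAP
print(prx_series(icp_passive, mean_ap)[0], prx_series(icp_reactive, mean_ap)[0])
print(cpp(icp_passive, mean_ap)[:3])
```

A positive PRx means ICP passively follows arterial pressure (impaired cerebrovascular pressure reactivity), whereas a negative PRx suggests that the vessels react to pressure changes; the two synthetic traces show the extremes of +1 and −1.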

REAL-TIME AI APPLICATIONS IN THE NICU ENVIRONMENT

In complex and unpredictable environments such as the NICU, the speed and accuracy of medical decisions are critical [102,112]. Achieving both in clinical decision-making is made harder by the sheer amount of data generated during neuromonitoring and by patients’ altered levels of consciousness. A clinical decision support system (CDSS) [86] is intended to improve healthcare delivery by enhancing medical decisions with targeted clinical knowledge, patient information, and other health information [90]. AI plays a variety of roles in CDSSs, such as monitoring and analyzing a patient’s condition in real time and predicting future events. By analyzing large amounts of data in a complex environment, AI helps clinicians quickly recognize situations and make appropriate medical decisions.

Signal quality control

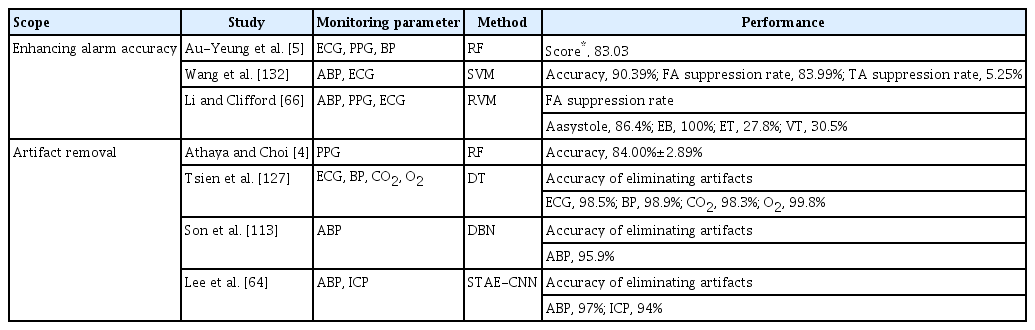

False alarms are prevalent in the NICU, reducing staff responsiveness to alarms, degrading the quality of care, and indirectly affecting patients by disrupting their sleep [23,25,61,126]. Because neurophysiological signals are complex, simple filter-based artifact rejection techniques are often insufficient. Several studies have therefore proposed methods to minimize false alarms, either by identifying and removing artifacts, the main cause of false alarms, or by improving alarm accuracy [4,5,64,66,113,127,132] (Table 2). The primary benefit of artifact elimination is improved signal quality and a consequent reduction in false alarms; beyond this, signal quality control may significantly change the prognostic value of essential neurophysiological signals. For instance, Kim et al. [55] reported that the association between the prevalence of hypotension and worse outcomes became significantly stronger after artifacts were eliminated from arterial pressure recordings.
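The sketch below illustrates why artifact removal can change a derived clinical measure. It applies a deliberately simple rule-based quality mask (samples outside a plausible range, or stuck at an identical value for several consecutive samples, are discarded) to a hypothetical MAP trace and recomputes hypotension prevalence. The plausible range, the flat-line length, and the 65 mmHg hypotension threshold are assumptions for illustration; rules like these are exactly the kind of simple filtering that the ML-based methods cited above aim to improve on.

```python
def quality_mask(samples, lo=20.0, hi=200.0, flat_len=5):
    """True = usable sample. Rejects out-of-range values and runs of >= flat_len identical values."""
    n = len(samples)
    ok = [lo <= s <= hi for s in samples]
    run_start = 0
    for i in range(1, n + 1):
        # a run of identical values ends at the last sample or when the value changes
        if i == n or samples[i] != samples[run_start]:
            if i - run_start >= flat_len:
                for k in range(run_start, i):
                    ok[k] = False
            run_start = i
    return ok


def hypotension_prevalence(samples, mask, threshold=65.0):
    """Fraction of usable samples below the hypotension threshold."""
    kept = [s for s, m in zip(samples, mask) if m]
    if not kept:
        return float("nan")
    return sum(1 for s in kept if s < threshold) / len(kept)


# Hypothetical MAP trace (mmHg) with a disconnection (0), a flat segment (40) and a flush spike (250).
map_trace = [85, 84, 86, 0, 0, 83, 40, 40, 40, 40, 40, 82, 60, 87, 85, 250, 84, 62, 86, 85]
raw = hypotension_prevalence(map_trace, [True] * len(map_trace))
clean = hypotension_prevalence(map_trace, quality_mask(map_trace))
print(f"raw: {raw:.2f}, after artifact removal: {clean:.2f}")
```

In this toy trace, the artifacts inflate the apparent hypotension prevalence from about 17% to 45%, showing how unfiltered artifacts can distort the association between a monitored variable and outcome.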

Event prediction

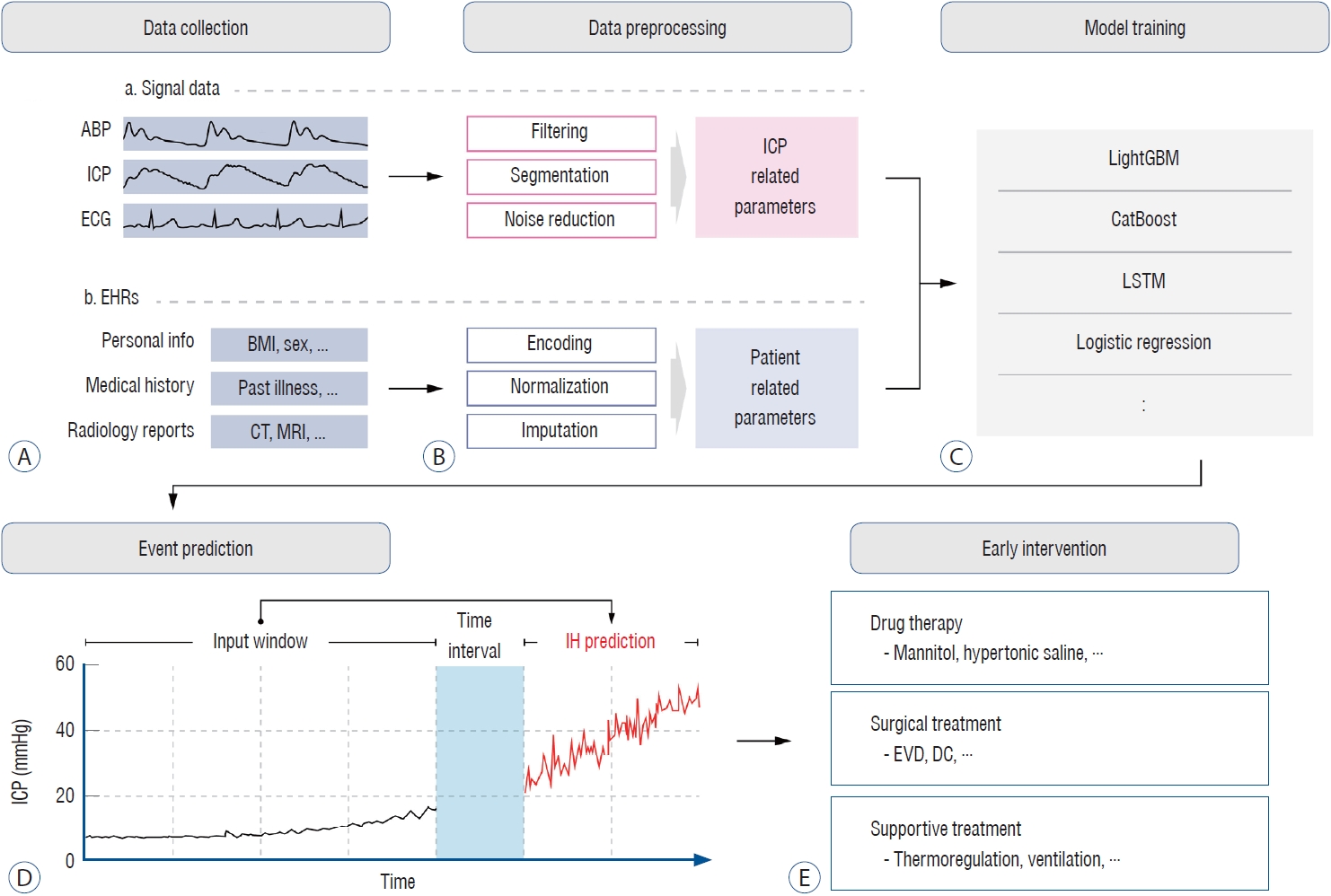

Intracranial hypertension (IH) is common during the acute phase of TBI and can aggravate secondary insults [95]. Predicting IH could allow rapid intervention and thus result in better outcomes; hence, it has long been considered an important topic in neurocritical care [12,63]. Schweingruber et al. [108] reported the performance of long short-term memory (LSTM) models in predicting significant events at prediction horizons ranging from 1 to 24 hours. These models were trained using demographic variables and continuously monitored arterial blood pressure (ABP) and ICP. The model showed robust performance even with a 24-hour horizon, with an AUC of 0.826; however, the most clinically relevant prediction was made 2 hours before the significant event, achieving an AUC of 0.953. Additionally, this study defined two phases of IH : ‘long phase IH,’ in which ICP remains above 22 mmHg for more than 2 hours, and ‘short phase IH,’ lasting less than 2 hours. Predicting each phase 2 hours in advance, the LSTM model achieved AUCs of 0.953 and 0.728 for the long and short phases, respectively. Similarly, Güiza et al. [38] developed a model that could predict, 30 minutes in advance, episodes of ICP elevation to 30 mmHg lasting >10 minutes, using only the dynamic characteristics of continuous ICP and MAP. The prediction model was built using multivariate LR and a GP and achieved a classification accuracy of 77%, sensitivity of 82%, and specificity of 75% on the validation set. Going beyond predicting IH events in general, Lee et al. [63] focused specifically on predicting ‘life-threatening’ intracranial hypertension (LTH). In this study, IH episodes were classified as LTH if they were characterized by a PRx exceeding 0 and a pressure-time dose greater than 5. The study aimed to predict whether ongoing IH would escalate to LTH within 5 minutes, using CatBoost models built on ABP- and ICP-related parameters. This model achieved an AUC of 0.73 for predicting LTH on the test dataset. Furthermore, numerous studies have developed models using various variables and algorithms to predict ICP episodes early [16,52,75,107,136] (Fig. 1).
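The event definitions above can be operationalized in a few lines. The sketch below finds contiguous runs of ICP above a threshold, computes each run’s pressure-time dose as the area between the ICP curve and the threshold, and labels episodes as long or short phase using the 2-hour cut-off described by Schweingruber et al. [108]. The 22 mmHg threshold follows the text; the 1-minute sampling interval, the rectangle-rule integration, and the dose units (mmHg·min) are assumptions, and the cited studies may define episodes and doses differently (for example, by tolerating brief dips below the threshold).

```python
def ih_episodes(icp, threshold=22.0):
    """Return (start, end_exclusive) index pairs for runs of consecutive samples with ICP > threshold."""
    episodes, start = [], None
    for i, v in enumerate(icp):
        if v > threshold and start is None:
            start = i
        elif v <= threshold and start is not None:
            episodes.append((start, i))
            start = None
    if start is not None:  # episode still ongoing at the end of the record
        episodes.append((start, len(icp)))
    return episodes


def pressure_time_dose(icp, episode, threshold=22.0, sample_min=1.0):
    """Area between ICP and the threshold over one episode, in mmHg*min (rectangle rule)."""
    start, end = episode
    return sum(icp[i] - threshold for i in range(start, end)) * sample_min


def ih_phase(episode, sample_min=1.0, long_min=120.0):
    """'long' if the episode lasts more than 2 h (default cut-off), otherwise 'short'."""
    duration_min = (episode[1] - episode[0]) * sample_min
    return "long" if duration_min > long_min else "short"


# Hypothetical ICP trace sampled once per minute (mmHg).
icp = [15.0] * 10 + [25.0] * 150 + [18.0] * 20 + [30.0] * 30 + [20.0] * 5
for ep in ih_episodes(icp):
    print(ep, ih_phase(ep), pressure_time_dose(icp, ep))
```

Labels produced in this way become the prediction targets for models such as the LSTM and CatBoost approaches described above.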

A visual representation of the entire process of the ICP-related event prediction model. A : Signal data such as ABP, ICP, and ECG, and EHR data such as personal information, medical history, and radiology reports, are collected from patients during hospitalization. B : The collected data must be preprocessed before being used as model input. Signal data are filtered according to predefined ranges and segmented into windows of specific sizes, and noise is removed according to signal quality. Non-signal patient data undergo processes such as encoding, normalization, and imputation of missing values, depending on the data type. C : An appropriate model is selected and trained using the processed ICP-related signal parameters and patient health-related parameters. D : Using signal data from a specific window and preprocessed EHR data, the model can predict life-threatening clinical conditions, such as IH, within a defined time interval. E : In a clinical setting, such predictions can assist clinicians in deciding on early interventions such as drug treatment, surgery, and supportive care. ABP : arterial blood pressure, ICP : intracranial pressure, ECG : electrocardiogram, EHRs : electronic health records, BMI : body mass index, CT : computed tomography, MRI : magnetic resonance imaging, LightGBM : light gradient boosting machine, LSTM : long short-term memory, IH : intracranial hypertension, EVD : external ventricular drainage, DC : decompressive craniectomy.
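Steps B through D of this pipeline can be sketched in plain Python. The hypothetical `make_windows` applies a crude range check, slides a fixed-size window over a signal, summarizes each window with a few statistics, and labels it positive if an event occurs within the following `horizon` samples; the hypothetical `impute_and_standardize` fills missing values of a numeric EHR variable with the median and z-scores the result. The function names, summary statistics, valid range, and toy ICP trace are illustrative choices, not the pipeline of any specific study.

```python
def make_windows(signal, event_flags, window, horizon, stride=1, valid_range=(-10.0, 100.0)):
    """Return (features, label) pairs for sliding windows over one monitored signal.

    features: mean, min, max and last value of the window (illustrative choices).
    label: 1 if any event flag is set in the `horizon` samples right after the window.
    Windows containing values outside `valid_range` are skipped as a crude quality filter.
    """
    lo, hi = valid_range
    samples = []
    for end in range(window, len(signal) - horizon + 1, stride):
        seg = signal[end - window:end]
        if any(not (lo <= v <= hi) for v in seg):
            continue
        features = [sum(seg) / window, min(seg), max(seg), seg[-1]]
        label = int(any(event_flags[end:end + horizon]))
        samples.append((features, label))
    return samples


def impute_and_standardize(column):
    """Median-impute missing values (None) in a numeric EHR variable, then z-score it."""
    observed = sorted(v for v in column if v is not None)
    if not observed:
        raise ValueError("column has no observed values")
    mid = len(observed) // 2
    median = observed[mid] if len(observed) % 2 else (observed[mid - 1] + observed[mid]) / 2
    filled = [median if v is None else v for v in column]
    mean = sum(filled) / len(filled)
    sd = (sum((v - mean) ** 2 for v in filled) / len(filled)) ** 0.5
    return [(v - mean) / sd if sd > 0 else 0.0 for v in filled]


# Hypothetical ICP trace (mmHg) with events defined as ICP > 22 mmHg.
icp = [10, 11, 12, 13, 14, 30, 31, 12, 11, 10]
events = [v > 22 for v in icp]
windows = make_windows(icp, events, window=3, horizon=2)
print([label for _, label in windows])
print(impute_and_standardize([1.0, None, 3.0]))
```

Whether windows that already overlap an ongoing event should be kept, as they are here, is a design decision that strongly affects reported performance; some studies exclude them so that the model is evaluated only on genuine advance warnings.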

Minimally invasive neuromonitoring

Simple clinical assessments may not detect subtle changes in brain physiology or alterations in neurological status that could appear at a later stage [115]. This is particularly true for TBI, in which the patient’s condition may deteriorate rapidly, necessitating the integration of clinical assessments with other neuromonitoring techniques [112]. Even ICP (and, to some extent, CPP), which has conventionally served as a core measure in TBI, has yielded conflicting results concerning its prognostic value [18,138]. Over the past decade, multimodal neuromonitoring has garnered increasing attention. For example, studies have shown that combining brain tissue oxygen tension-based treatment with conventional ICP/CPP-oriented protocols leads to better neurological outcomes and lower mortality [58,99]. Cerebral microdialysis can also provide a better understanding of hypoperfusion and metabolism when complemented by other modalities [74]. Thus, multimodal neuromonitoring provides a more accurate picture of each patient’s individual circumstances and condition, paving the way for individualized therapy [74]. However, most multimodal monitoring techniques are invasive, which creates a range of problems. For example, invasive ICP monitoring using a ventricular catheter carries the risk of numerous complications, including infection and intracranial hemorrhage [31,112]. Cerebral microdialysis is no exception and additionally faces problems such as tissue inflammatory responses and biofouling [115]. Therefore, many studies have investigated minimally invasive or non-invasive neuromonitoring [3,106,131]. In addition, advances in AI have made it increasingly feasible to acquire and analyze physiological signals non-invasively.

Various technologies spanning fluid dynamics, ophthalmic, otic, and electrophysiologic fields have been proposed for non-invasive ICP monitoring, including magnetic resonance imaging (MRI), transcranial Doppler ultrasound, and optic nerve sheath diameter assessment, among others. One of the most traditional approaches to non-invasive ICP estimation uses cerebral blood flow velocity (CBFV) and ABP [48,51,53]. Imaduddin et al. [48] used a pseudo-Bayesian model to estimate ICP from non-invasive measurements such as ABP and CBFV; the model showed a mean absolute error (MAE) of 0.6 mmHg for mean ICP estimation relative to invasive measurements. Similarly, Kashif et al. [53] developed a patient-specific, model-based algorithm that estimates ICP from routinely collected ABP and CBFV signals without requiring calibration. Using 35 hours of data from 37 patients with TBI, the model produced ICP estimates with an MAE of 1.6 mmHg and a standard deviation of 7.6 mmHg. At its best, the model achieved a sensitivity of 90%, a specificity of 80%, and an AUC of 0.88, indicating improved accuracy and reduced variability compared with previous studies [89]. More recently, attempts have been made to estimate ICP from ECG. In 2021, Sadrawi et al. [105] used ECG and a U-Net-based deep convolutional autoencoder to noninvasively estimate mean ICP, obtaining an MAE of 2.404±0.043 mmHg and a Pearson correlation coefficient of 0.89. Although these results were inferior to those of previous studies that estimated ICP from CBFV and ABP, they are still valuable because they rely on completely non-invasive ECG signals.
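The agreement statistics quoted in this paragraph (MAE, the standard deviation of the estimation error, and Pearson’s correlation) can be computed as in the sketch below. It assumes paired, time-aligned estimated and invasively measured ICP values and treats the reported standard deviation as the sample standard deviation of the error; how each cited study defined these quantities may differ. The paired values in the usage lines are hypothetical.

```python
import math


def agreement_metrics(estimated, measured):
    """Agreement between non-invasive ICP estimates and invasive reference values (mmHg)."""
    n = len(estimated)
    errors = [e - m for e, m in zip(estimated, measured)]
    mae = sum(abs(d) for d in errors) / n
    bias = sum(errors) / n
    sd_error = math.sqrt(sum((d - bias) ** 2 for d in errors) / (n - 1))  # sample SD of the error
    me, mm = sum(estimated) / n, sum(measured) / n
    cov = sum((e - me) * (m - mm) for e, m in zip(estimated, measured))
    r = cov / math.sqrt(sum((e - me) ** 2 for e in estimated) * sum((m - mm) ** 2 for m in measured))
    return {"mae": mae, "bias": bias, "sd_error": sd_error, "pearson_r": r}


# Hypothetical paired values (mmHg).
invasive = [10.0, 12.0, 15.0, 20.0, 25.0]
estimated = [11.0, 11.0, 16.0, 22.0, 24.0]
print(agreement_metrics(estimated, invasive))
```

Reporting the mean error (bias) and its spread separately, as in a Bland–Altman analysis, makes clear that a small MAE can coexist with occasional large errors and that a high correlation alone does not guarantee agreement.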

Unfortunately, a number of challenges hinder the clinical use of these non-invasive methods. Almost all review articles on this topic have concluded that ICP cannot be estimated non-invasively in all situations, for all patients, and over long periods without calibration [31,59,94,100,103,141]. For instance, CBFV not only exhibits high intra- and inter-user variability but is also significantly affected by other factors such as medications and autoregulation. These factors compromise the reliability of CBFV as a source signal and make it difficult to use for long-term continuous monitoring. Other non-invasive ICP monitoring methods also have distinct limitations, such as insufficient validation, inability to monitor continuously, poor signal quality, dependence on operator expertise, and inter-observer variability. For example, the neurological pupil index measured by pupillometry has recently attracted much attention in research and clinical practice [17,49,91], but its suitability for continuous monitoring, its accuracy in reflecting changes in ICP, and its precision in estimating ICP remain controversial [70,101,117].

Despite these limitations, non-invasive ICP measurement can be a viable and attractive alternative for patients who are not candidates for invasive monitoring. Non-invasive methods continue to be improved and validated, and multimodal non-invasive neuromonitoring technologies that integrate several methods are expected to substantially reduce existing limitations. Advances in such technologies could mark an important turning point in non-invasive ICP measurement.

AI-ENHANCED NEUROCRITICAL CARE IN THE NEAR FUTURE

In neurocritical care, clinical decisions require careful consideration of the vast amount of information generated by neuromonitoring and patient management. Several decision support AI applications have been proposed to this end, but at present they have been designed and used for relatively limited tasks such as patient monitoring, event prediction, and prognosis forecasting, that is, simple classification tasks [130]. Furthermore, most, if not all, existing AI applications analyze signals, images, or tabular data separately without integrating them. However, with rapid advancements in AI technology, newer and more advanced approaches have emerged. GMAI and DT technology are two such examples that hold promise for transforming neurocritical care. Although still somewhat conceptual, these technologies could substantially expand the capabilities of AI in this field.

Concepts of DT and GMAI

A DT is a virtual replica of a physical system or object, a technological concept for efficiently testing and simulating products or processes in a digital environment [62,122]. In medicine, DTs can serve as real-time digital replicas of patients for developing effective treatment strategies. They could also be useful for optimizing medical processes and hospital management strategies [21].

The first application of DT in medicine was the Archimedes project in 2003, in which a DT approach was used and validated to model the complex management of diabetes [27]. Later, Lal et al. [60] developed a DT model of intensive care unit (ICU) patients to predict the therapeutic response during the first 24 hours of sepsis and conducted pilot testing in an actual ICU. This study used various technologies, such as agent-based modeling, discrete event simulation, and Bayesian networks, to visualize major organ systems, including the nervous and cardiovascular systems, and to simulate treatment effects. In pilot testing, the DT showed moderate agreement (Cohen’s kappa, 0.41) for the first response and good agreement (Cohen’s kappa, 0.65) for the second response. In recent years, DT models have been developed in other medical fields, such as cardiology and endocrinology, to provide tailored treatment [20,24,30].
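Cohen’s kappa, the statistic used to report agreement in this pilot, corrects the raw proportion of agreement p_o for the agreement p_e expected by chance given each rater’s category frequencies: κ = (p_o − p_e) / (1 − p_e). The sketch below computes it for two hypothetical sequences of categorical judgments (for example, a DT’s predicted treatment response versus the observed response); the categories and data are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical judgments."""
    n = len(rater_a)
    p_observed = sum(1 for a, b in zip(rater_a, rater_b) if a == b) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    categories = set(count_a) | set(count_b)
    # chance agreement: product of the two raters' marginal proportions, summed over categories
    p_expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if p_expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical responses: DT prediction vs. observed response.
dt_pred = ["better", "better", "better", "worse", "worse", "worse", "better", "worse", "better", "worse"]
observed = ["better", "better", "worse", "worse", "worse", "worse", "better", "better", "better", "worse"]
print(round(cohens_kappa(dt_pred, observed), 3))
```

Here the two sequences agree on 80% of cases, but because each uses both categories half the time, 50% agreement is expected by chance, so κ = 0.6.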

The concept of GMAI stems from a limitation shared by existing medical AI models : they are designed to perform specific tasks and do not generalize to new tasks or datasets [37,44,92]. GMAI aims to overcome this limitation by relying on a foundation model pretrained on large amounts of data that can flexibly perform various tasks [84]. Conceptually, GMAI can conduct diverse medical tasks without altering the trained network, offering robustness and versatility [67]. According to Moor et al. [84], GMAI could support clinical decision-making by drafting radiology reports that take patient history into account and by summarizing real-time patient data and complex electronic health records to predict future patient status or compare treatment options. In addition, voice-to-text models could enable interactive note-taking, with reports drafted from conversations with clinicians; this capability could also be deployed in chatbots for individual patients.

Promises of DT and GMAI in TBI

With DT and GMAI, clinicians may virtually explore treatment options and craft personalized medical plans and rehabilitation programs. Furthermore, patient-friendly chatbots and personalized reports can help patients intuitively understand their own condition, enabling them to participate more actively in their healthcare, treatment, and recovery. The broad spectrum of symptoms and outcomes in patients with TBI, driven by the cause, location, and severity of the injury, demands personalized treatment approaches, and this heterogeneity has long been recognized as a significant challenge in the field [73,140]. By leveraging data on a patient’s demographic characteristics, risk factors, and injury patterns, a DT model can be built to aid in establishing a personalized treatment plan. McIver [79] proposed a model that creates patient-specific finite element meshes based on patients’ MRI data. Using DT technology, the model reflects the structure and function of an individual’s brain and can simulate the effects of various types of head trauma. It also enables proactive identification of patients who may suffer severe long-term damage from repeated head impacts, contributing to prevention and effective management strategies for TBI. Advances in GMAI may allow the application of AI to expand beyond tasks such as prediction and classification [13,36,84,98]. For instance, by integrating medical imaging with clinical information, GMAI could generate integrated radiological reports for clinicians that include not only predictive analysis but also recommendations for personalized treatment and surgical plans. Based on this information, chatbot services could offer patients easy-to-understand consultations and personalized health education materials.

DT and GMAI can also be used to monitor rehabilitation status and design personalized rehabilitation programs by comprehensively considering a patient's medical history, cognitive status, and motor skills. For example, Huanxia [46] presented a method for monitoring the gait of patients with gait disorders in real time using a powered exoskeleton and DT technology. The powered exoskeleton supports the patient's movement, while the DT analyzes gait patterns by digitally replicating the patient's actual physical state. The system can issue fall alerts and evaluate the effectiveness of rehabilitation, helping clinicians implement appropriate interventions based on the patient's condition.

Pitfalls and remaining challenges

The combination of DT and GMAI could offer significant benefits to both clinicians and patients by supporting medical decision-making at every step and guiding personalized care. However, despite their tremendous potential, several challenges must be addressed before these technologies can be implemented and used in practice.

Data scale

To develop models that can serve various purposes in the medical field, large and diverse datasets are needed, along with standardized specifications for signals, devices, and other components to ensure data uniformity. This requires collaboration between centers, as well as guidelines and unified storage methods for data standardization. Moreover, even when data are available, the cost of model training grows substantially as the foundation models underlying DT and GMAI increase in size, which also raises environmental concerns.

Privacy and security

Medical AI handles sensitive personal information related to patient health. DT and GMAI models in particular require a substantial amount of personal information to achieve high performance, and because a DT is a virtual replica of an individual, security becomes even more critical. Hence, the relevant data must be collected, stored, and processed in a secure and privacy-compliant manner.

Validation

Because DT and GMAI models are complex, validating them poses significant challenges. Unlike current task-specific AI models, these models perform diverse tasks, making it very difficult to anticipate all possible scenarios. Moreover, because DT and GMAI models would directly affect patient health when used in clinical practice, validation is especially important, and diverse and rigorous validation procedures are required.

Application

Integrating AI into real medical practice, especially in the NICU for TBI patients, presents significant challenges. First, a major obstacle for AI applications in clinical settings is compatibility between software and hardware: an algorithm optimized for one system may not function properly on another. For example, an algorithm trained on data from a particular MRI scanner may not perform well on a scanner of a different brand or model. Second, medical AI is still too immature to function effectively, especially in complex medical situations such as TBI. Because AI requires large amounts of data and information before it can be applied clinically, it will take time for it to reach a sufficient level of clinical proficiency. Although evaluating algorithm performance metrics such as accuracy is crucial, it is even more important to validate compatibility, effectiveness, stability, and related aspects when algorithms are applied in actual clinical settings. Such validation requires close collaboration among physicians, computer scientists, and data managers, and it will provide a deeper understanding of the clinical value of medical AI technology. Finally, until these validation processes are complete, clinicians should avoid over-reliance on AI decisions and always keep in mind the possibility of errors or misdiagnosis.

CONCLUSION

The application of AI, particularly ML, and of concepts such as DT and GMAI holds substantial promise for improving neurocritical care for patients with TBI. These technologies have potential across a wide range of applications, from prognostication and decision support to patient-specific treatment planning and even rehabilitation. However, although these opportunities are significant, challenges remain, including the need for high-quality data, privacy concerns, ethical considerations, and further validation of these technologies. Despite these hurdles, the future of AI in neurocritical care seems bright, and continuous research and development in this field are necessary to unlock its full potential.

Notes

Conflicts of interest

No potential conflict of interest relevant to this article was reported.

Informed consent

This type of study does not require informed consent.

Author contributions

Conceptualization : KAK, HK, DJK; Funding acquisition : DJK; Methodology : EJH, BCY; Project administration : HK, DJK; Visualization : KAK; Writing - original draft : KAK; Writing - review & editing : KAK, HK, EJH, BCY, DJK

Data sharing

None

Preprint

None

Acknowledgements

This work was supported by a National Research Foundation of Korea (NRF) Grant funded by the Korean government (Ministry of Science and ICT, MSIT) (No. 2022R1A2C1013205).